Translate English to French/German

Use our custom Transformer model, trained on WMT17, to translate English sentences into French or German.


Custom Transformer Inference

This demo runs inference with a PyTorch Transformer trained from scratch. Input text is split into subword tokens, and translations are decoded with either greedy or beam search.
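To make the subword step concrete, here is a minimal sketch of how a byte-pair-encoding (BPE) style tokenizer segments one word. The page does not say which subword scheme the model uses, so BPE is an assumption, and `apply_bpe` and `TOY_MERGES` are hypothetical names with a made-up merge table, not the demo's real vocabulary.

```python
def apply_bpe(word, merges):
    """Segment one word by repeatedly applying the highest-priority merge.

    `merges` is an ordered list of symbol pairs; earlier pairs win.
    (Hypothetical helper: the demo's actual tokenizer may differ.)
    """
    ranks = {pair: i for i, pair in enumerate(merges)}
    symbols = list(word) + ["</w>"]  # "</w>" marks the end of the word
    while len(symbols) > 1:
        pairs = [(symbols[i], symbols[i + 1]) for i in range(len(symbols) - 1)]
        best = min(pairs, key=lambda p: ranks.get(p, float("inf")))
        if best not in ranks:
            break  # no learned merge applies any more
        merged, i = [], 0
        while i < len(symbols):
            if i < len(symbols) - 1 and (symbols[i], symbols[i + 1]) == best:
                merged.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                merged.append(symbols[i])
                i += 1
        symbols = merged
    return symbols


# Toy merge table, invented for illustration only.
TOY_MERGES = [("l", "o"), ("lo", "w"), ("e", "r"), ("er", "</w>")]

print(apply_bpe("lower", TOY_MERGES))  # ['low', 'er</w>']
```

A word the merge table has never seen still splits into known pieces, which is why subword vocabularies handle rare words without an unknown-token fallback.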

- Model: Custom PyTorch Transformer (encoder-decoder), trained from scratch

- Trained on: WMT16 EN→FR/DE

- Decoding: Configurable between greedy and beam search

- Deployed on: Local VM via Flask API
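The difference between the two decoding modes listed above can be sketched with a small, model-free example. This is a simplified illustration, not the demo's implementation: `beam_search`, `toy_step`, and `TOY_MODEL` are hypothetical, the "model" is a hand-written probability table, and there is no length normalization. Setting `beam_size=1` reduces the search to greedy decoding.

```python
import math


def beam_search(step_fn, bos, eos, beam_size=4, max_len=10):
    """Return the highest-scoring token sequence found.

    step_fn(prefix) -> dict of next token -> probability (assumed interface).
    beam_size=1 is equivalent to greedy decoding.
    """
    beams = [([bos], 0.0)]  # (tokens, cumulative log-probability)
    finished = []
    for _ in range(max_len):
        candidates = []
        for tokens, score in beams:
            for tok, prob in step_fn(tokens).items():
                candidates.append((tokens + [tok], score + math.log(prob)))
        # Keep only the best `beam_size` expansions.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for tokens, score in candidates[:beam_size]:
            if tokens[-1] == eos:
                finished.append((tokens, score))
            else:
                beams.append((tokens, score))
        if not beams:
            break
    pool = finished or beams
    return max(pool, key=lambda c: c[1])[0]


# Toy distribution in which the locally best first token leads to a worse sentence.
TOY_MODEL = {
    ("<s>",): {"a": 0.6, "b": 0.4},
    ("<s>", "a"): {"x": 0.5, "y": 0.5},
    ("<s>", "b"): {"z": 0.9, "w": 0.1},
}


def toy_step(prefix):
    # Any prefix not in the table ends the sentence.
    return TOY_MODEL.get(tuple(prefix), {"</s>": 1.0})


greedy = beam_search(toy_step, "<s>", "</s>", beam_size=1)
beam = beam_search(toy_step, "<s>", "</s>", beam_size=2)
```

In this toy table, greedy commits to `a` (probability 0.6) and ends with a sequence of total probability 0.3, while a beam of 2 keeps `b` alive and finds `b z` with probability 0.36. Beam search spends extra compute per step in exchange for that wider view.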

Model Training & Comparison Charts

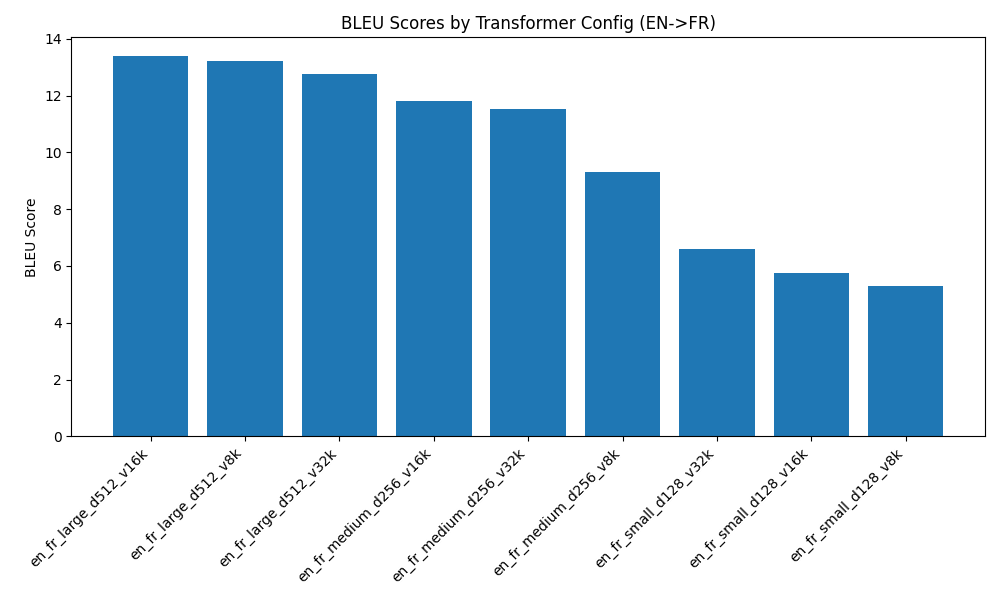

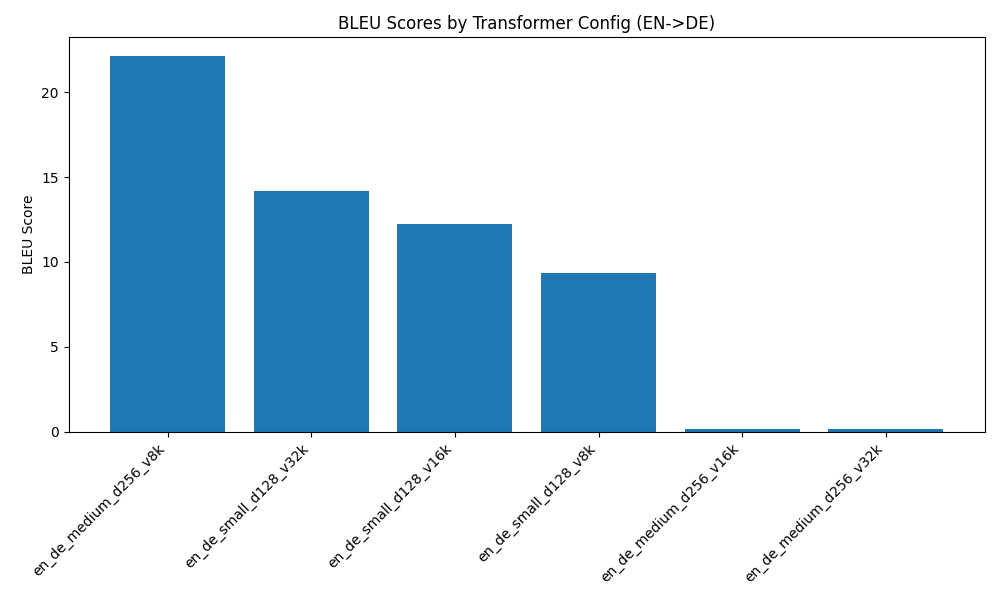

Model Size & Vocab Comparison

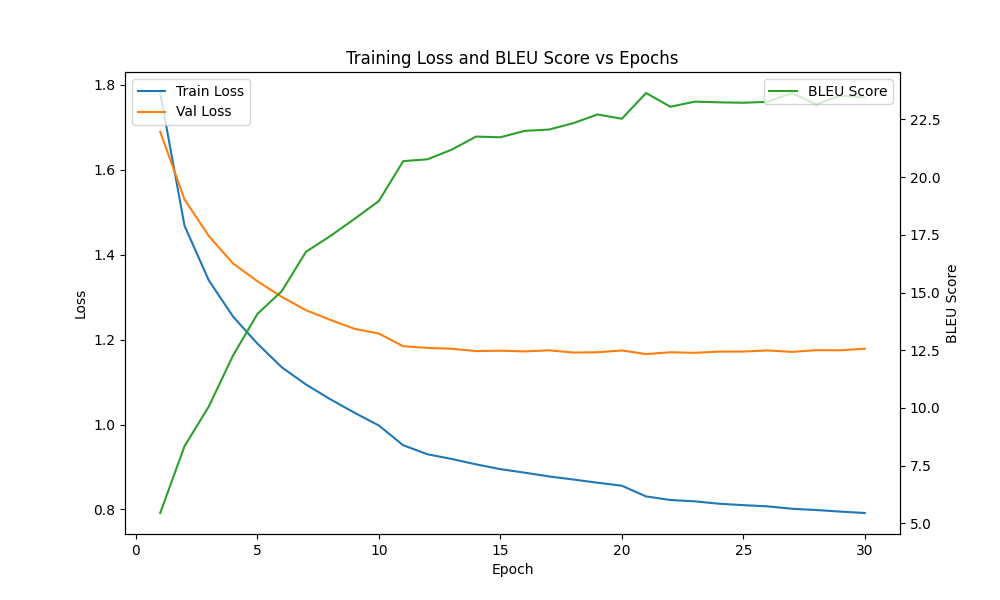

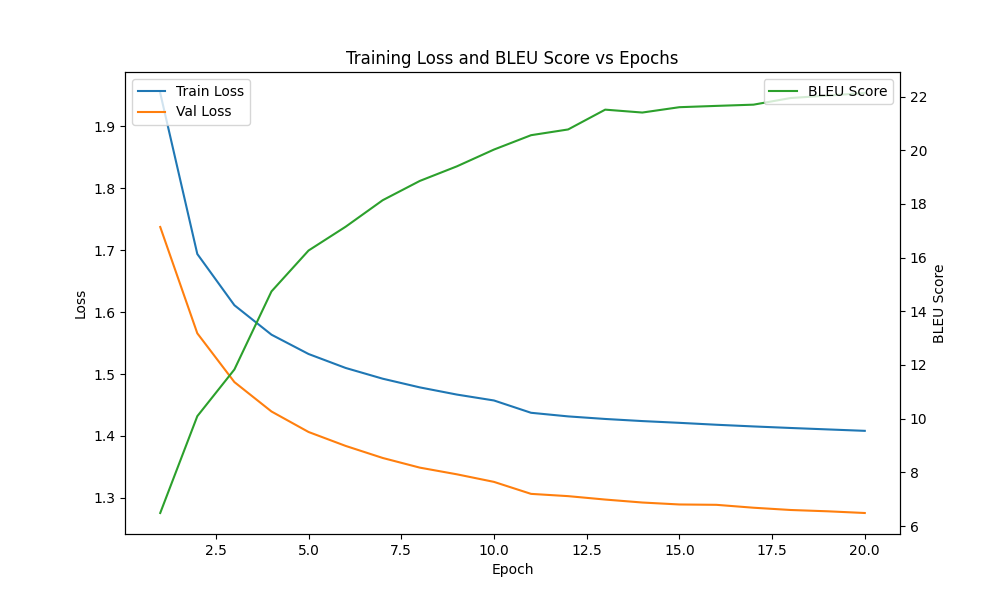

Training Metrics (Loss, BLEU)

BLEU Score Summary Tables

EN → FR

EN → DE

Transformer Architecture Diagram

This diagram illustrates the custom Transformer architecture used in our EN→FR/DE model, including Encoder/Decoder blocks, multi-head attention, and positional encodings.
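As a companion to the diagram, the sketch below computes positional encodings in plain Python. It assumes the fixed sinusoidal scheme from the original Transformer design; the page does not state whether this model uses sinusoidal or learned positions, and `sinusoidal_positions` is a hypothetical helper name.

```python
import math


def sinusoidal_positions(max_len, d_model):
    """Build a max_len x d_model table of sinusoidal position encodings.

    Even dimensions use sine, odd dimensions use cosine, and each
    sine/cosine pair shares one wavelength.
    """
    table = []
    for pos in range(max_len):
        row = []
        for i in range(d_model):
            angle = pos / (10000 ** (2 * (i // 2) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


pe = sinusoidal_positions(max_len=4, d_model=8)
```

Each row is added to the token embedding at that position before the first encoder or decoder block, so that attention, which is otherwise order-agnostic, can tell positions apart.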